AIM: Apple's Autoregressive Image Models

Introduction

Large language models (LLMs) have revolutionized natural language processing, with a steady stream of models being released (e.g., ChatGPT, Mixtral, Gemma, Gemini, Llama). But what about computer vision? Can the success of LLMs be replicated for vision tasks?

The answer comes from Apple with AIM. AIM (Autoregressive Image Models) is a collection of vision models pre-trained with an autoregressive objective, inspired by textual LLMs, to learn high-quality visual representations. The models exhibit desirable scaling properties similar to those of textual LLMs, and scaling is one of the key factors behind the success of LLMs.

Architecture

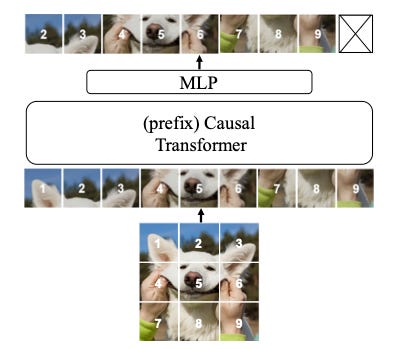

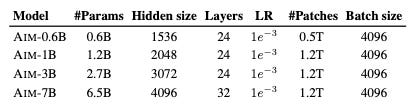

The core model is a standard Vision Transformer (ViT), pre-trained with a straightforward autoregressive objective inspired by language modeling. When scaling the model, the authors prioritize expanding width rather than depth. Specifically, an input image is split into a sequence of non-overlapping patches, and the model is trained to predict the pixels of each patch conditioned on the previous patches in raster-scan order. The training loss is the negative log-likelihood of these conditional probabilities, so minimizing it pushes the model toward the true image distribution. In practice, the basic loss is a normalized pixel-level regression that minimizes the L2 distance between predicted and actual patch values. An overview of the architecture is illustrated in Fig. 1. The design parameters and the amount of data for each model are summarized in Table 1.
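The patch splitting and the regression loss described above can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' implementation: the function names, the 224x224 input, the patch size of 14, and the per-patch mean/variance normalization (in the style of MAE) are all assumptions made for the example.

```python
import numpy as np

def patchify(image, patch_size):
    """Split an (H, W, C) image into non-overlapping patches in raster order.

    Returns an array of shape (num_patches, patch_size * patch_size * C).
    """
    h, w, c = image.shape
    assert h % patch_size == 0 and w % patch_size == 0
    gh, gw = h // patch_size, w // patch_size
    patches = image.reshape(gh, patch_size, gw, patch_size, c)
    patches = patches.transpose(0, 2, 1, 3, 4)  # (gh, gw, p, p, c)
    return patches.reshape(gh * gw, patch_size * patch_size * c)

def normalize_patches(patches, eps=1e-6):
    """Normalize every patch to zero mean and unit variance (assumed scheme)."""
    mean = patches.mean(axis=-1, keepdims=True)
    var = patches.var(axis=-1, keepdims=True)
    return (patches - mean) / np.sqrt(var + eps)

def autoregressive_l2_loss(predictions, patches):
    """Mean squared error between predictions[k] and normalized patch k + 1.

    predictions has shape (K - 1, D): row k is the model's guess for
    patch k + 1 given patches 0..k.
    """
    targets = normalize_patches(patches)[1:]
    return float(np.mean((predictions - targets) ** 2))

# Hypothetical usage with a random "image" and a stand-in for the model output.
rng = np.random.default_rng(0)
image = rng.random((224, 224, 3))
patches = patchify(image, patch_size=14)   # (256, 588)
fake_predictions = np.zeros((patches.shape[0] - 1, patches.shape[1]))
loss = autoregressive_l2_loss(fake_predictions, patches)
```

The first patch has no preceding context, so it only ever serves as input; that is why the targets start at index 1.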

Some key design choices and innovations include:

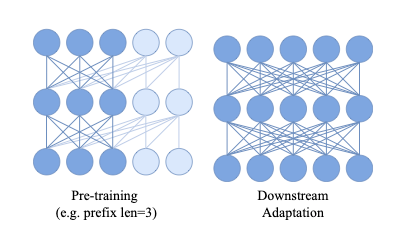

Prefix Attention: During pre-training, AIM uses a prefix attention mechanism, as in T5. For the first few "prefix" patches, attention is allowed to be bidirectional. But for the remaining patches, a causal mask is applied to prevent attending to future positions. This allows easy transitioning to bidirectional attention during finetuning without architectural changes.
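A prefix attention mask of this kind can be built as follows. This is an illustrative sketch only; the function name and the boolean-mask convention (`True` means "may attend") are assumptions, not taken from the AIM code.

```python
import numpy as np

def prefix_causal_mask(num_patches, prefix_len):
    """Return a boolean mask where mask[i, j] is True if patch i may attend to patch j."""
    # Start from a standard causal (lower-triangular) mask.
    mask = np.tril(np.ones((num_patches, num_patches), dtype=bool))
    # Patches inside the prefix attend to each other bidirectionally.
    mask[:prefix_len, :prefix_len] = True
    return mask

# Hypothetical example: 5 patches, the first 2 form the prefix.
mask = prefix_causal_mask(num_patches=5, prefix_len=2)
```

Setting `prefix_len` equal to `num_patches` yields a fully bidirectional mask, which is the configuration used downstream, so the same model can switch modes by changing only the mask.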

Token-level Prediction Heads: To prevent the trunk features from overfitting to the pre-training objective, AIM uses heavily parameterized MLP prediction heads on top of the final transformer layer, processing each patch individually. These heads specialize in low-level pixel prediction, allowing the trunk to learn more transferable high-level representations.

Autoregressive Pattern: The training objective is that of a standard autoregressive model applied to a sequence of image patches. For the order in which patches are traversed for next-patch prediction, a raster (row-major) ordering was shown to work best compared to other patterns (e.g., spiral, checkerboard, random). The simple raster pattern presents a more uniform difficulty across the sequence compared to patterns that get easier towards the end.

Stable & Straightforward Training: Compared with the BERT-style masked prediction used in prior work, AIM's autoregressive objective is found to be more effective for pre-training visual representations that transfer well. Moreover, AIM can be trained at scale without the special tricks or stabilizing mechanisms required by supervised or other self-supervised methods.

Efficient “Attentive Probe” Downstream Adaptation: AIM focuses on downstream tasks where the trunk weights stay frozen and only a classification head is trained, which cuts adaptation costs and lessens the risk of overfitting. Unlike contrastive learning, whose loss operates on a global image descriptor, AIM computes its loss per patch, so no global descriptor is learned during pre-training. To form one, AIM applies attention pooling over the patch features, which boosts performance with few additional parameters. This Attentive Probe method retains linear probing's low parameter count and reduced overfitting risk while improving performance.
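The attention-pooling step can be illustrated with a small NumPy sketch. It assumes a single attention head with one learnable query vector and hypothetical projection matrices `w_key` and `w_value`; the actual probe may differ (for example, by using multiple heads), so treat this as a simplified picture of the idea rather than the paper's implementation.

```python
import numpy as np

def softmax(x, axis=-1):
    """Numerically stable softmax."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def attentive_pool(patch_features, query, w_key, w_value):
    """Pool (K, D) frozen patch features into a single global descriptor.

    query:   (D_k,) learnable query vector
    w_key:   (D, D_k) learnable key projection
    w_value: (D, D_v) learnable value projection
    Returns the pooled (D_v,) descriptor and the (K,) attention weights.
    """
    keys = patch_features @ w_key                      # (K, D_k)
    values = patch_features @ w_value                  # (K, D_v)
    scores = keys @ query / np.sqrt(keys.shape[-1])    # (K,)
    weights = softmax(scores)                          # sums to 1 over patches
    return weights @ values, weights

# Hypothetical usage: 16 patches with 8-dimensional features from a frozen trunk.
rng = np.random.default_rng(0)
features = rng.normal(size=(16, 8))
pooled, weights = attentive_pool(
    features,
    query=rng.normal(size=8),
    w_key=rng.normal(size=(8, 8)),
    w_value=rng.normal(size=(8, 8)),
)
```

A linear classifier on top of `pooled` would complete the probe. Only the query, the two projections, and the classifier are trained; the trunk that produced `features` stays fixed.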

Pre-training Dataset

The models are pre-trained on the DFN dataset. Starting from a collection of 12.8 billion image-text pairs from Common Crawl, the data is pre-processed by filtering out inappropriate content, blurring faces, and removing duplicates, and the 2 billion top-ranked images are kept as DFN-2B. Beyond these steps, no content-based curation is applied, which leaves the door open to broader image collections with varying degrees of image-text alignment. Following the large language model (LLM) practice of prioritizing high-quality data, pre-training draws 80% of its images from DFN-2B and 20% from ImageNet-1k; the resulting mixture is referred to as DFN-2B+.
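One way to read the 80/20 mixture is as a per-sample draw: each training image comes from DFN-2B with probability 0.8 and from ImageNet-1k with probability 0.2. The sketch below illustrates that reading; the function name, the string labels, and the seed are assumptions for the example, not part of any released data pipeline.

```python
import numpy as np

def sample_sources(num_samples, p_dfn=0.8, seed=0):
    """Pick a source dataset for each training sample (assumed per-sample mixing)."""
    rng = np.random.default_rng(seed)
    return rng.choice(
        ["DFN-2B", "ImageNet-1k"],
        size=num_samples,
        p=[p_dfn, 1.0 - p_dfn],
    )

# Hypothetical usage: the empirical fraction approaches 0.8 for large batches.
sources = sample_sources(100_000)
dfn_fraction = float(np.mean(sources == "DFN-2B"))
```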

Key Results

AIM models scale gracefully up to 7 billion parameters with a vanilla Transformer, requiring no stability tricks or hyper-parameter tuning.

There is a clear correlation between the pre-training loss and downstream performance across many datasets.

On 15 recognition benchmarks, AIM models outperform prior state-of-the-art methods like MAE.

Figure 5: Downstream evaluation with a frozen trunk. Assessment of the quality of AIM features by evaluating against a diverse set of 15 image recognition benchmarks. AIM and the baseline methods are evaluated using attentive probing with a frozen trunk. AIM models exhibit a strong performance across all benchmarks, especially the AIM-7B. AIM outperforms all other methods, using joint-embedding or generative approaches, except for DINOv2 which utilizes higher-resolution images, that typically result in a 1-1.5% improvement on ImageNet for instance. †: Extracting features from the 20th layer instead of the last (32nd). (source: https://arxiv.org/pdf/2401.08541.pdf)

The largest 7B AIM model achieves 84% top-1 accuracy on ImageNet with a frozen trunk.

There are no signs of performance saturation, even at 7B parameters and 2B pre-training images.

Limitations

AIM stands out for its ability to scale smoothly and make effective use of vast amounts of uncurated image data. However, alternative approaches present various trade-offs:

MAE achieves high sample efficiency, learning robust representations with less pre-training data, which reduces the risk of overfitting.

Contrastive learning techniques currently yield stronger representations for the same model size compared to generative methods like MAE and AIM. However, the complexity of their objectives poses significant challenges for scalability and makes the loss harder to manage.

References

[1] https://arxiv.org/pdf/2401.08541.pdf